Quantum mechanics was invented in the early years of the twentieth century to
explain measurements made on very simple systems involving just a small number
of basic particles, such as photons, atoms or electrons. It was able to predict
the outcomes of experiments that could not be explained using Newtonian
mechanics. Newtonian mechanics is the theory that explains the macroscopic
world, like how a baseball flies, or why the earth circles the sun.


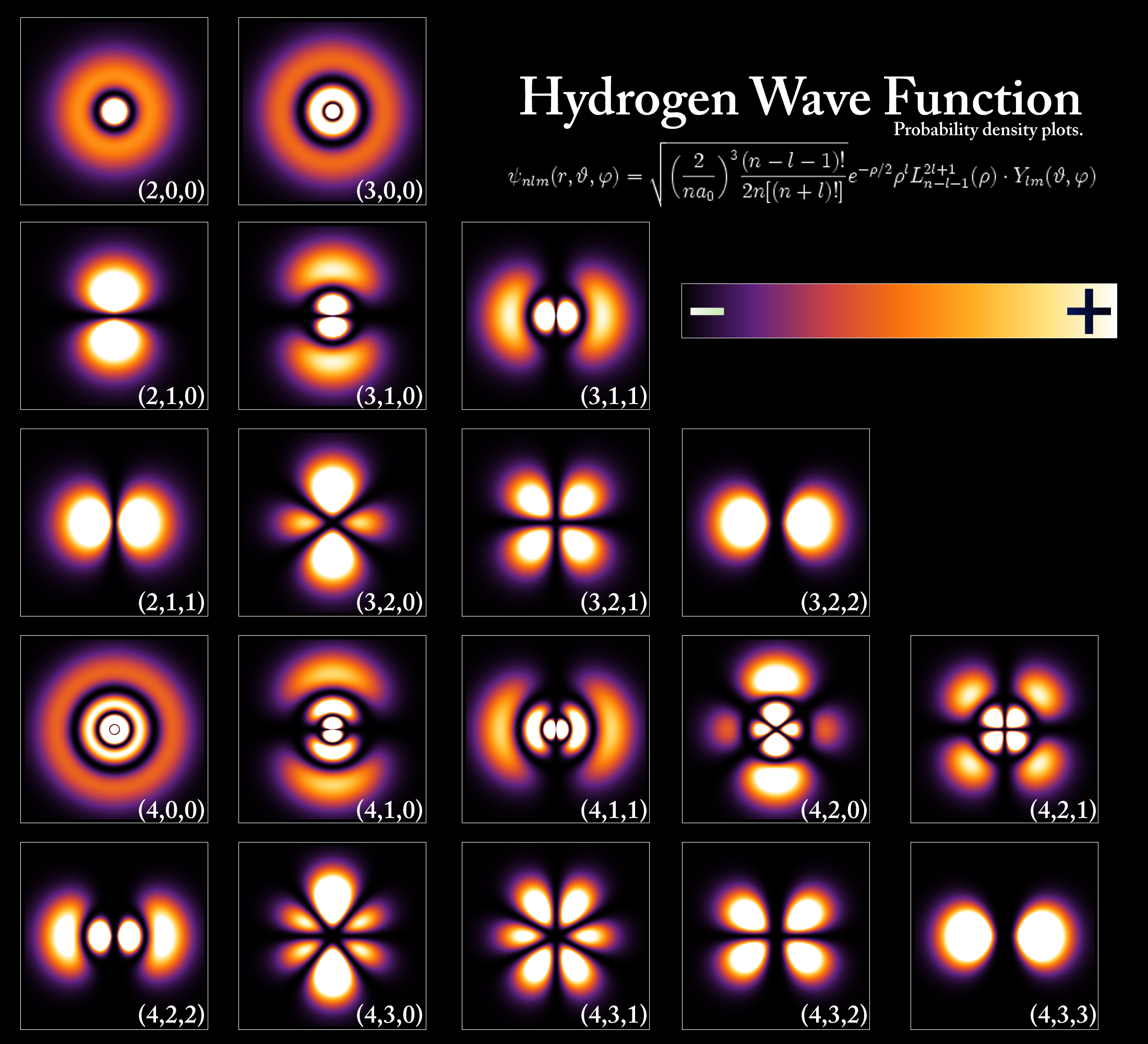

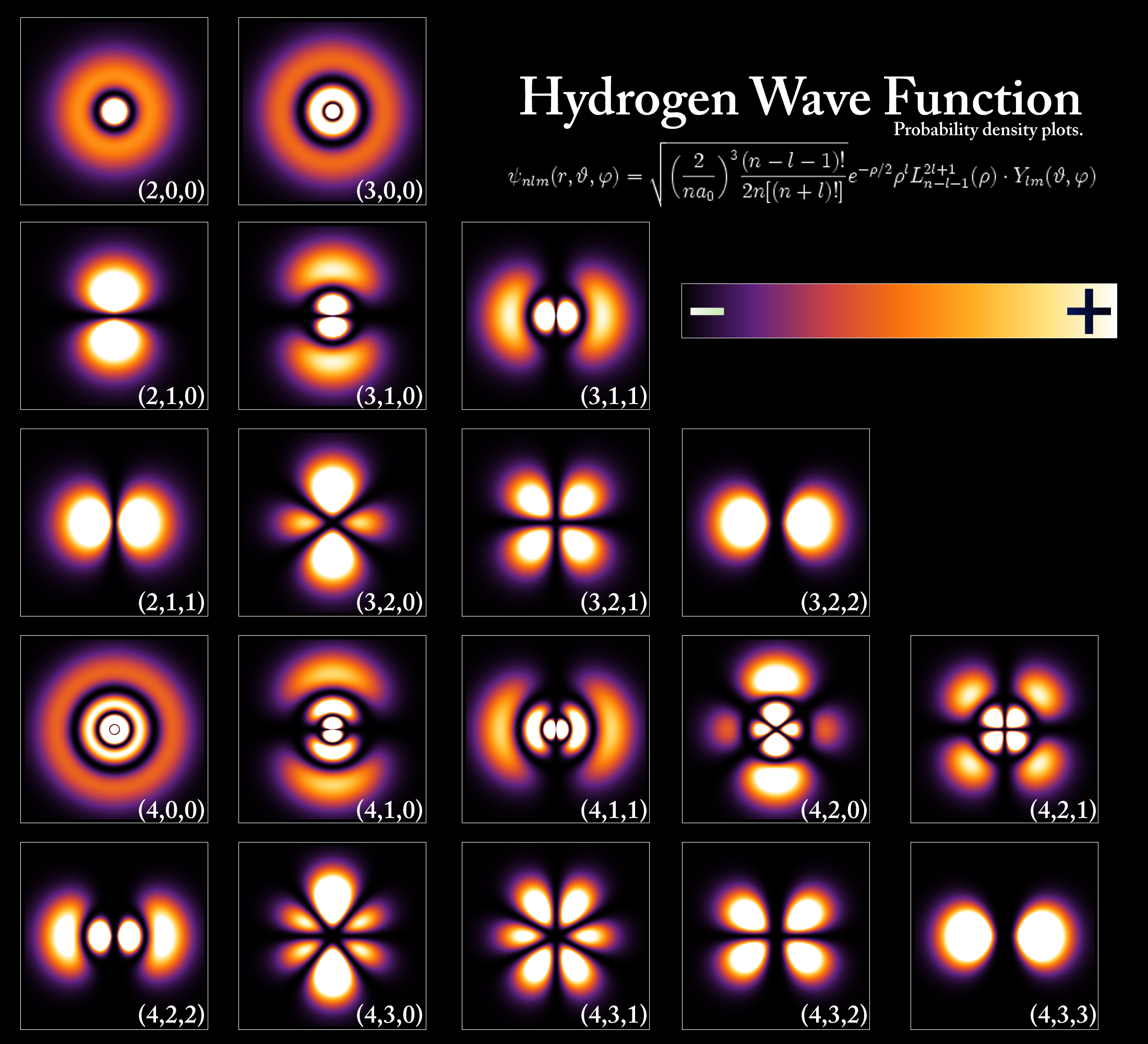

At the heart of quantum theory is the wavefunction, which encapsulates
everything that is known about a quantum mechanical system. From the
wavefunction it is possible to calculate where each particle is most likely to
be, or how fast it’s likely to be moving. These properties cannot be calculated
exactly, because quantum mechanics tells us that the microscopic world is
inherently unpredictable. That is to say, the wavefunction may be prepared in
exactly the same way each time, yet the measured properties of the system will
vary from one measurement to the next.
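To make "identical preparation, varying outcomes" concrete, here is a minimal Python sketch. It assumes a hypothetical one-dimensional Gaussian wavefunction on a grid (the grid, the centre `x0` and the `width` are illustrative choices, not values from the text) and applies the Born rule, under which the probability of finding the particle near `x` is proportional to |ψ(x)|². Each repetition starts from the same state, yet the measured position differs every time.

```python
import numpy as np

# Hypothetical example: a Gaussian wavepacket on a 1D grid. The grid, centre
# x0 and width are illustrative assumptions, not values from the text.
x = np.linspace(-5.0, 5.0, 201)
dx = x[1] - x[0]
x0, width = 1.0, 0.8
psi = np.exp(-((x - x0) ** 2) / (4 * width**2)).astype(complex)

# Normalise so that the total probability sum(|psi|^2) * dx equals 1.
psi /= np.sqrt(np.sum(np.abs(psi) ** 2) * dx)

# Born rule: probability of finding the particle in each grid cell.
prob = np.abs(psi) ** 2 * dx
prob /= prob.sum()  # remove tiny floating-point drift before sampling

most_likely_x = x[np.argmax(prob)]

# "Prepare" the same state 10,000 times and measure the position each time.
rng = np.random.default_rng(seed=0)
outcomes = rng.choice(x, size=10_000, p=prob)

print(f"most likely position:  {most_likely_x:.2f}")
print(f"first five outcomes:   {np.round(outcomes[:5], 2)}")
print(f"mean of outcomes:      {outcomes.mean():.3f}")
print(f"spread of outcomes:    {outcomes.std():.3f}")
```

The most likely position is the peak of |ψ|², but any single outcome can land anywhere the probability is appreciable; only the statistics over many repetitions are fixed by the wavefunction.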

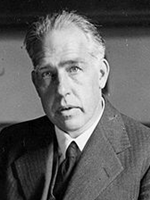

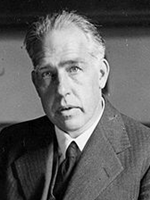

This naturally leads to the question of what exactly the wavefunction is. In
the early years of quantum theory there was much debate on this subject. The
debate was largely silenced in the 1920s, when a group of esteemed physicists
led by Niels Bohr came up with a way of thinking about the wavefunction that
was accepted by most physicists. They argued that the only thing physicists
are able to do is make measurements of the system, and that what happens to
the system between these measurements is unknowable. Furthermore, the
wavefunction should be considered only as a mathematical tool for predicting
the results of future measurements. This viewpoint became known as the
Copenhagen Interpretation, and to date it is still the conventional view held
by most physicists.

Bohr was correct in his interpretation, given the paradigms that existed at
that time. Measurements were thought to destroy the pre-measurement
wavefunction, which was then recreated in a new form. Indeed, that seems to be
the case whenever a measurement is made on the system to maximize the
precision of the measured value.


In 1988 a new theory was developed that considered measurements made with
less precision. As you might imagine, the strength of a measurement can be
turned down, and as the strength approaches zero, no measurement is being made
at all. When no measurement is made, the wavefunction is not destroyed and can
propagate without changing form. So what happens between the extremes of a
strong measurement and ‘no measurement at all’? This is the regime that has
come to be known as ‘weak measurement’.
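The idea of turning a measurement's strength down can be illustrated with a toy model. The sketch below assumes the textbook von Neumann picture, which the text does not spell out: a two-level system with eigenvalues 0 and 1 is coupled to a pointer whose reading carries Gaussian noise of width `sigma`, so small `sigma` means a strong measurement and large `sigma` a weak one. For each reading it applies the corresponding Gaussian measurement operator and reports how similar (the fidelity) the state is before and after. The function names `measure_and_fidelity` and `mean_fidelity` are hypothetical.

```python
import numpy as np


def measure_and_fidelity(c, sigma, rng):
    """One Gaussian-pointer measurement of an observable with eigenvalues 0, 1.

    Returns (pointer reading r, fidelity between pre- and post-measurement state).
    """
    eigenvalues = np.array([0.0, 1.0])
    probs = np.abs(c) ** 2
    # Sample the reading: branch k with probability |c_k|^2, then add
    # Gaussian pointer noise of width sigma.
    k = rng.choice(2, p=probs)
    r = eigenvalues[k] + sigma * rng.standard_normal()
    # Apply the Gaussian measurement operator for reading r (diagonal in
    # the eigenbasis), then renormalise the post-measurement state.
    post = c * np.exp(-((r - eigenvalues) ** 2) / (4 * sigma**2))
    post /= np.linalg.norm(post)
    fidelity = np.abs(np.vdot(c, post)) ** 2
    return r, fidelity


def mean_fidelity(c, sigma, trials=2000, seed=1):
    """Average fidelity over many independent measurements of the same state."""
    rng = np.random.default_rng(seed)
    return np.mean([measure_and_fidelity(c, sigma, rng)[1] for _ in range(trials)])


c = np.array([1.0, 1.0], dtype=complex) / np.sqrt(2)  # equal superposition

for sigma in (0.01, 0.5, 10.0):
    print(f"sigma = {sigma:5}: mean fidelity = {mean_fidelity(c, sigma):.4f}")
```

For the equal superposition used here, a strong measurement leaves a fidelity near 0.5 (the state has collapsed onto one eigenstate), while a very weak one leaves it close to 1, at the cost of a pointer reading dominated by noise.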

With weak measurements, it’s possible to learn something about the
wavefunction without completely destroying it. As the measurement becomes very
weak, you learn very little about the wavefunction, but leave it largely
unchanged. This is the technique that we’ve used in our experiment. We have
developed a methodology for measuring the wavefunction directly by repeating
many weak measurements on a group of systems that have been prepared with
identical wavefunctions. As the measurements are repeated, knowledge of the
wavefunction accumulates to the point where high precision can be restored.
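The statistical point, that many imprecise readings can be combined into a precise one, can be sketched as follows. This is a deliberately simplified toy model, not the experiment's actual procedure: it assumes each weak measurement returns the real and imaginary parts of a hypothetical wavefunction `psi_true` at every grid point, plus Gaussian noise of width `SIGMA` that is larger than the signal itself. How an experiment arranges for the pointer to read out the amplitude is not described in the text and is not modeled here.

```python
import numpy as np

# Hypothetical "true" wavefunction: a complex Gaussian wavepacket on a coarse
# grid, normalised so that sum(|psi|^2) == 1. Chosen only for illustration.
x = np.linspace(-3.0, 3.0, 21)
psi_true = np.exp(-x**2 / 2 + 1j * 1.5 * x)
psi_true /= np.linalg.norm(psi_true)

# Per-component readout noise; larger than every |psi_true| value here, so a
# single reading says little about the amplitude (a "weak" reading).
SIGMA = 1.0


def weak_readings(n, rng):
    """Toy model: n noisy readings of Re(psi) and Im(psi) at every grid point."""
    noise = rng.standard_normal((n, x.size)) + 1j * rng.standard_normal((n, x.size))
    return psi_true + SIGMA * noise


def reconstruct(n, seed=2, batch=10_000):
    """Average n readings (in batches to bound memory) to estimate psi."""
    rng = np.random.default_rng(seed)
    total = np.zeros(x.size, dtype=complex)
    remaining = n
    while remaining > 0:
        m = min(batch, remaining)
        total += weak_readings(m, rng).sum(axis=0)
        remaining -= m
    return total / n


for n in (10, 1_000, 100_000):
    err = np.linalg.norm(reconstruct(n) - psi_true)
    print(f"N = {n:>7}: reconstruction error = {err:.4f}")
```

Because the noise averages down as 1/√N, each hundredfold increase in the number of readings should cut the reconstruction error by roughly a factor of ten.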

So what does this mean? We hope that the scientific community can now improve
upon the Copenhagen Interpretation, and redefine the wavefunction so that it is
no longer just a mathematical tool, but rather something that can be directly
measured in the laboratory.
